In a lovely example of synchronicity this week, a book and an article from The New York Times converged. The book is ON KILLING by Lt. Col. Dave Grossman. The article is “The Pentagon’s Terminator Conundrum: Robots That Could Kill on Their Own”. The book moves smoothly into the article, so let’s begin.

We are subjected to an incredible amount of violence via the popular media. I’m not specifically talking about children or video games. I’m talking about us, the global society that accesses Western or Western-aping popular culture. This includes video games, of course, but also movies and books, not to mention Top 100 music and their slick videos. There are death threats on Facebook, insults on Twitter, jeers on Instagram, and so on. No channel of popular media is free of it. We’re told that humans are an inherently violent species. “What other species kills members of its own species?” you’ve probably heard. Actually, quite a few, but let’s not let that stand in the way of a good bromide. Quentin Tarantino unleashes fables of bloodshed so bloody that you need to put a bucket under the screen to catch the excess body fluids. And yet…

Let’s take it from an actual soldier, and infantry at that! In ON KILLING (2009 revised edition), Grossman deftly dissects the stories to expose the heart of the issue: even trained (or, as he rightly calls it, “conditioned”) soldiers don’t like killing other people.

Citing data from Richard Holmes and his book ACTS OF WAR, Grossman relates the hit rates of soldiers:

- 1879 (Rorke’s Drift, British vs Zulu): Holmes estimates thirteen rounds of ammunition fired for each “hit” on an enemy.

- 1876 (Rosebud Creek, Americans vs Native Americans): 252 rounds per hit.

- 1870 (Battle of Wissembourg, French vs Germans): 119 rounds per hit.

- Vietnam War: an astounding 50,000 rounds for each person killed.

The statistics continue throughout the first part of his book, leading to the inescapable conclusion that humans don’t like to kill humans. It seems that we subconsciously recognise that to kill someone else is to hamstring our own future biological viability, and so we don’t do it. (Whatever “explanations” you may have for this myth of a “killer human” are comprehensively debunked by Grossman, so if you’re of a mind to argue the point, I’d tell you to read the book and come to your own conclusions. Note that we aren’t talking about atrocities or psychopaths here, just run-of-the-mill state-sanctioned murder.)

Now we come to the problem. The United States is a failing empire. Concepts such as “Manifest Destiny” and documents from the “Project for the New American Century” (PNAC) have bolstered a national machismo centred on global imperial overreach for many decades, but (forget Russia or China!) when an upstart little nobody like the Philippines tells Uncle Sam quite openly that it is “separating”, then we can take it as read that something ground-breaking is in the air.

But the United States is very happy being Number One Cop in the world, and we must look at its reaction in light of recent events. To be fair, the strategists within the United States government are very smart people. Grossman may be writing about “killology” now (1995 for the original edition, 2009 for the revised), but the US Armed Forces have known for decades that it is very difficult to get the average soldier to kill another human being. James Tiptree Jr. tackled this issue in her brilliant short story, YANQUI DOODLE (1987). I’ll quote the synopsis from Wikipedia as it’s a good one:

The US is fighting a full-fledged war in Latin-America and the Congressional Armed Services Committee is on a tour of the front and will soon stop at a field hospital to visit the wounded. Soldiers are encouraged to take government-issued drugs (mainly amphetamines) to become remorseless killing machines. A soldier is recovering from a landmine explosion in this field hospital and it quickly becomes clear that drug withdrawal is a far more serious concern than his wounds.

Have the United States Armed Forces done this? Fed their soldiers pharmaceuticals in order to enhance the killer instinct and suppress empathy, and then had to reap the consequences? In 2002, The Christian Science Monitor, in an article titled “Military looks to drugs for battle readiness”, stated that:

According to military sources, the use of such drugs (commonly Dexedrine) is part of a cycle that includes the amphetamines to fight fatigue, and then sedatives to induce sleep between missions. Pilots call them “go pills” and “no-go pills.” For most Air Force pilots in the Gulf War (and nearly all pilots in some squadrons), this was the pattern as well.

The drugs are legal, and pilots are not required to take them although their careers may suffer if they refuse. (my emphasis)

Mainstream media tends to paint the US military in glowing colours, so one has to roam a little off the designated paths to find articles such as this one from 2006:

In Iraq and Afghanistan, when “suck it up” fails to snap a soldier out of depression or panic, the Army turns to drugs. “Soldiers I talked to were receiving bags of antidepressants and sleeping meds in Iraq, but not the trauma care they needed,” says Steve Robinson, a Defense Department intelligence analyst during the Clinton administration.

Sometimes sleeping pills, antidepressants and tranquilizers are prescribed by qualified personnel. Sometimes not. Sgt. Georg Andreas Pogany told Salon that after he broke down in Iraq, his team sergeant told him “to pull himself together, gave him two Ambien, a prescription sleep aid, and ordered him to sleep.”

…

“It concerns us when we hear military doctors say, ‘It’s wonderful that we have these drugs available to cope with second or third deployments,’” Joyce Raezer of the National Military Family Association told In These Times. (my emphasis)

Indeed.

When your trained killers basically don’t want to kill (a universal trait, I might add, across all races and cultures), and your medicated killers come back with ongoing trauma that translates into bad press for the military, not to mention the financial drag of post-deployment medical care, the military needs to turn to other solutions.

And so we come to The New York Times article. In summary:

[T]he Pentagon has put artificial intelligence at the center of its strategy to maintain the United States’ position as the world’s dominant military power … It has tested missiles that can decide what to attack, and it has built ships that can hunt for enemy submarines, stalking those it finds over thousands of miles, without any help from humans.

They can identify ships of different stripes, hook up with each other to share intel, discriminate between tanks and buses, and generally carry out analysis without direct human control. Of course, we’re told that the AI killers aren’t really that bad:

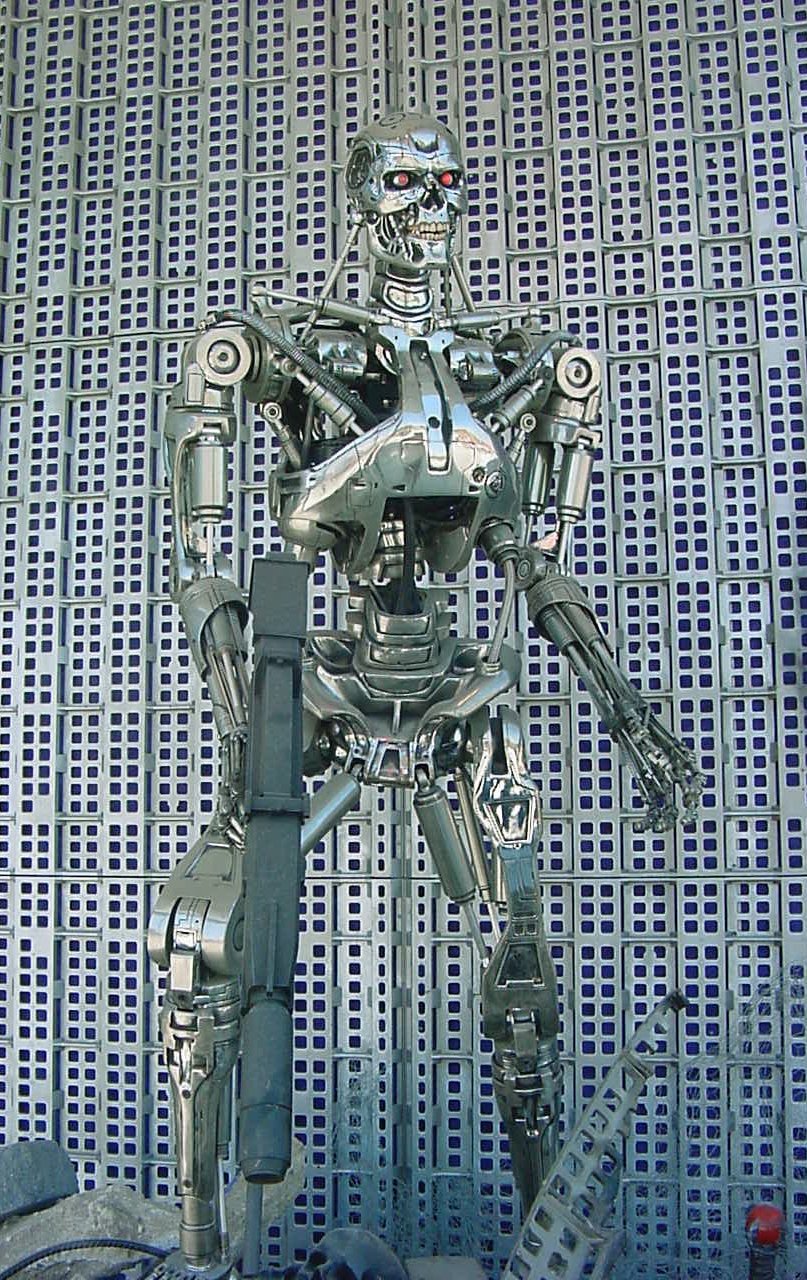

The weapons, in the Pentagon’s vision, would be less like the Terminator and more like the comic-book superhero Iron Man, Mr. [Robert] Work [deputy defense secretary] said in an interview.

“There’s so much fear out there about killer robots and Skynet,” the murderous artificial intelligence network of the “Terminator” movies, Mr. Work said. “That’s not the way we envision it at all.”

When it comes to decisions over life and death, “there will always be a man in the loop,” he said.

Did you see what they did there? “Comic-book superhero”. Sounds all exciting and comforting at the same time, doesn’t it? Wow, you’re thinking, they got this shit down! Iron Man! How cool is that?! What could possibly go wrong???!!! And if you adhere to that line of reasoning, I have some lovely apartment buildings in Yemen to sell you.

If you go to the NYT article I cite and follow the link from one of the passages above, it will lead you to “Fearing Bombs That Can Pick Whom to Kill”. This article was from late 2014, so think about what the Pentagon is capable of now while you read about what an “experimental” missile did back then:

On a bright fall day last year off the coast of Southern California, an Air Force B-1 bomber launched an experimental missile that may herald the future of warfare.

Initially, pilots aboard the plane directed the missile, but halfway to its destination, it severed communication with its operators. Alone, without human oversight, the missile decided which of three ships to attack, dropping to just above the sea surface and striking a 260-foot unmanned freighter. (my emphasis)

There will always be a “man in the loop”, eh? Sorry, Bob, but I don’t believe you.

There’s another thing to notice about the date. The Pentagon is screaming about Russian and Chinese threats now as a justification for its AI programmes:

“China and Russia are developing battle networks that are as good as our own. They can see as far as ours can see; they can throw guided munitions as far as we can,” said Robert O. Work, the deputy defense secretary, who has been a driving force for the development of autonomous weapons

but in 2014, when the missile testing was reported upon, the world was a relatively quiet place. China and Russia weren’t doing anything at all. At that point, the United States was still hoping to pull Russia (a mere “regional power”, a “gas station masquerading as a country”) into a Ukraine adventure that had begun less than nine months before, and China was trying to keep a lid on its yoyo-ing currency in an effort to make it into the IMF’s SDR by the end of 2015.

Plus, we all know the extended timelines involved in any Pentagon defence project. Are we to believe that the missile tested in the autumn of 2013 (and reported on in November 2014) that decided to disregard the “man in the loop” was developed in answer to threats that, as yet, didn’t exist? If, as Obama said in March 2014, “[Russia doesn’t] pose the number one national security threat to the United States”, why would an active AI killer programme (one of several, I’m sure) already be at the stage of testing? Remember that:

Weapons manufacturers in the United States were the first to develop advanced autonomous weapons. An early version of the Tomahawk cruise missile [first put into production in 1983] had the ability to hunt for Soviet ships over the horizon without direct human control.

Despite the fact that the United States has been defeated in almost every war it’s entered since World War Two, the Pentagon has always been a firm and committed imbiber of the defence contractor kool-aid. Bigger. Better. Faster. The US has had a technological advantage over the rest of the world’s weaponry for decades (even if it all fell into a heap in various engagements) and hasn’t been shown up in any major way until, dare we say it, this year in Syria.

No, judging by the length of time autonomous units have been in service, the only reasonable conclusion we can come to is that the US AI programme was not developed as a counter to similar programmes going on in Russia and China. There’s no doubt there are similar programmes in play in those, and other, countries, but if those states are so advanced that, as Work put it, “China and Russia are developing battle networks that are as good as our own…”, why wasn’t this mentioned as a threat earlier? Surely Santa Claus didn’t descend the chimney and deposit all these shiny new production-level battle networks underneath the Russian and Chinese Christmas trees at the end of 2015?

Hmmm. Maybe US surveillance isn’t up to the hype they would like us to swallow. Or maybe the United States doesn’t want a world of equals, regardless of its lofty rhetoric. The reason the Pentagon is developing AI is, as they themselves put it:

“What we want to do is just make sure that we would be able to win as quickly as we have been able to do in the past.”

Win? Threaten? If you read Grossman’s book, you’ll see that a war can easily be won through big enough threats (posturing), no AI deployment necessary.

But let’s get back to the continued development of such programmes. Will corners be cut? Will ethics be sidelined? Will there be “kill switches”, except not in the way we’re expecting: with the bomb severing communication instead of the “man in the loop” ordering a stand-down? Take this example:

Back in 1988, the Navy test-fired a Harpoon antiship missile that employed an early form of self-guidance. The missile mistook an Indian freighter that had strayed onto the test range for its target. The Harpoon, which did not have a warhead, hit the bridge of the freighter, killing a crew member.

Despite the accident, the Harpoon became a mainstay of naval armaments and remains in wide use.

One wonders where the “man in the loop” was in that particular instance. And, again, note the date: 1988. Dear reader, do you know where China and Russia were in 1988? If you said, desperately trying to hold their countries together, you wouldn’t be too far off the mark.

So we have (a) long-standing AI programmes that go back decades, (b) an inflexible win-win-win attitude, (c) lies regarding oversight of weapons of mass destruction that can make their own decisions and execute them, (d) lackadaisical, if not completely absent, shutdown protocols, all from (e) a nation-state that feels the need “to pick up some small crappy little country and throw it against the wall, just to show the world we mean business” every decade or so (Michael Ledeen).

At this point, I would normally say that the only thing standing between a future generation and the genocide of the human species would be a mother of a solar storm, nature’s own version of an EMP. But I doubt we’ll need to pray for such solar intervention. Why? Well, thank the gods for the basic greed and incompetence of United States defence contractors. Exhibit A: the F-35 strike fighter. Exhibit B: the Zumwalt-class destroyer. Just search for “problems with” followed by either name, and you’ll get the idea.

The truth is, were it not for American defence contractors, we might actually be seeing a Terminator robot clumping down the world’s streets in the near future. But we won’t. Or maybe we will in North America, or elsewhere in the Five Eyes, but I doubt those robots will make an appearance where the rest of us live. Lockheed Martin, thanks for being humanity’s friend.

—

For further reading on drug use in the military, try this nice round-up from Circle of 13

Grossman’s book is available from The Book Depository

This article © Kaz Augustin, 2016

* If you liked this article, please consider the Paypal tipjar in the site’s sidebar. I’ll be trying to hit big issues in ways that may not be obvious twice a month, on the first and fifteenth, and every cent helps. Thank you.